Connect OpenAI Assistant with Landbot

Updated

by Cesar Banchio


Web Bots

With OpenAI Assistants, we can design and deploy intelligent assistants that draw on a set of documents we provide in order to answer users' questions.

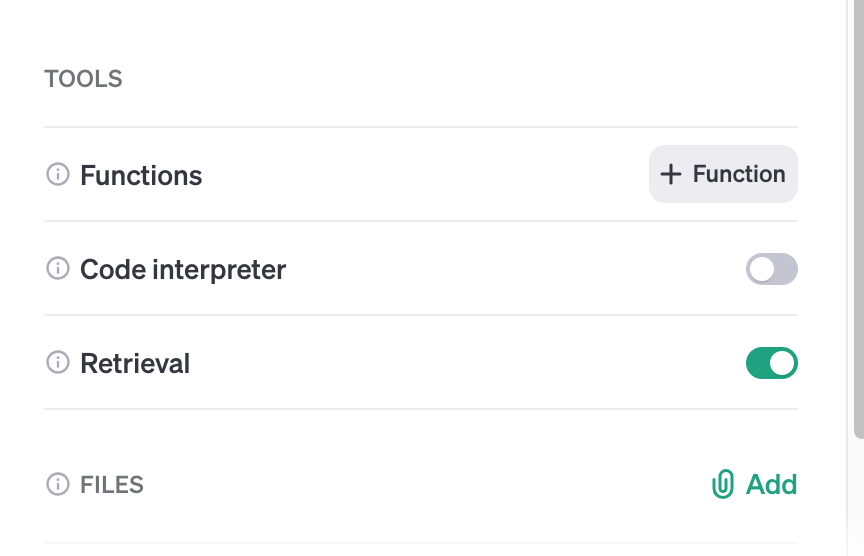

First, we need to create an assistant in OpenAI. Log in to your OpenAI account and go to https://platform.openai.com/assistants, where you provide the assistant's instructions and any documentation it should use to answer queries. If you want the assistant to search the documentation you provided, select the Retrieval option under Tools:

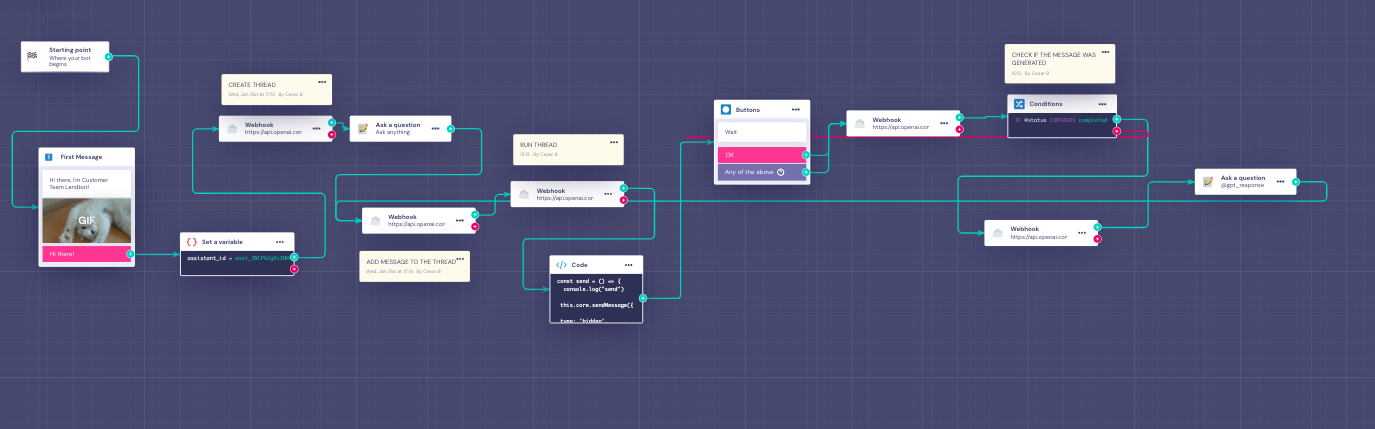

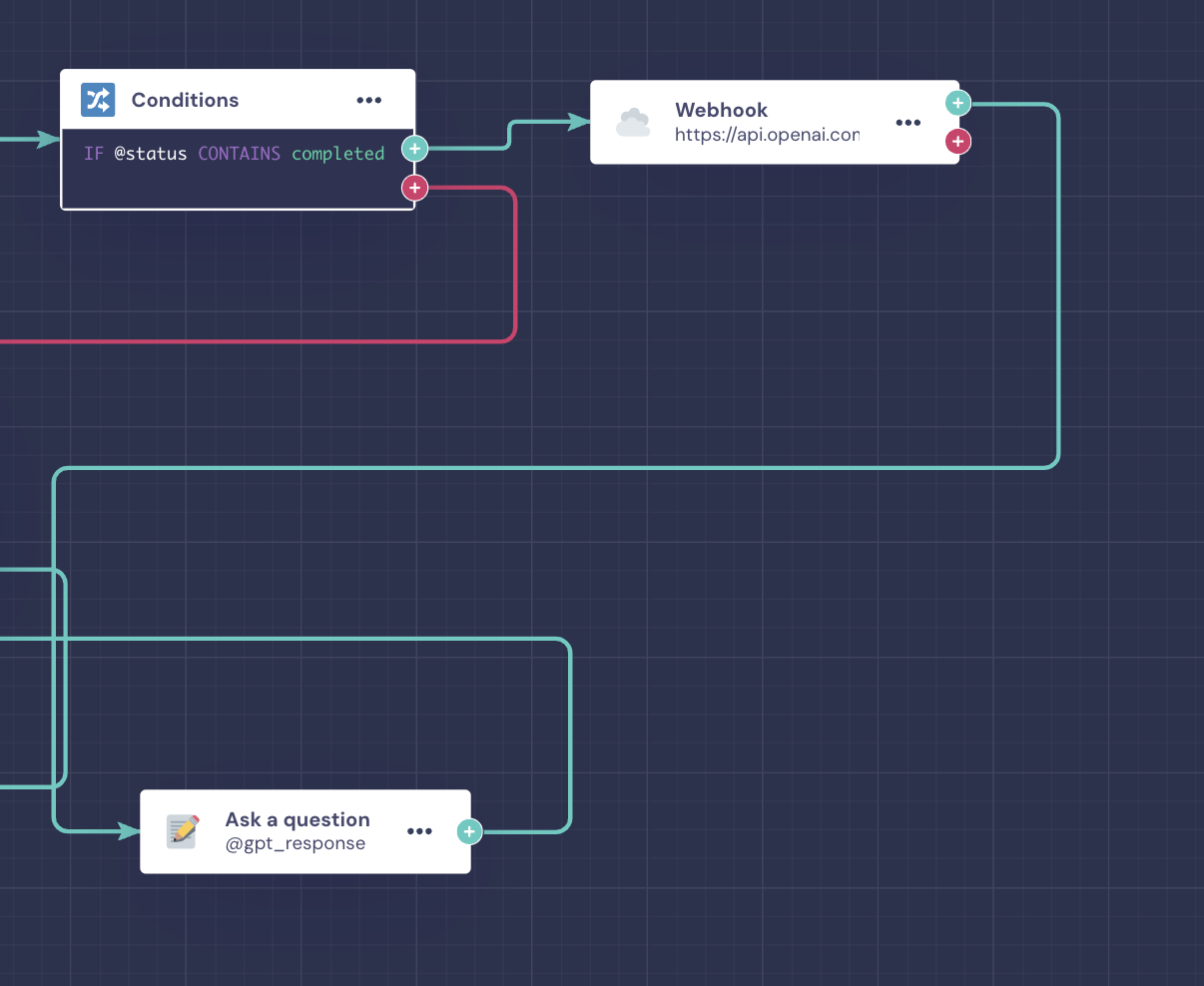

We can reach these assistants via the API and therefore integrate them into our bot. Doing so is simple and consists of a flow like the following:

For Assistant V1

You can download the template for Web Bots with the flow structure here.

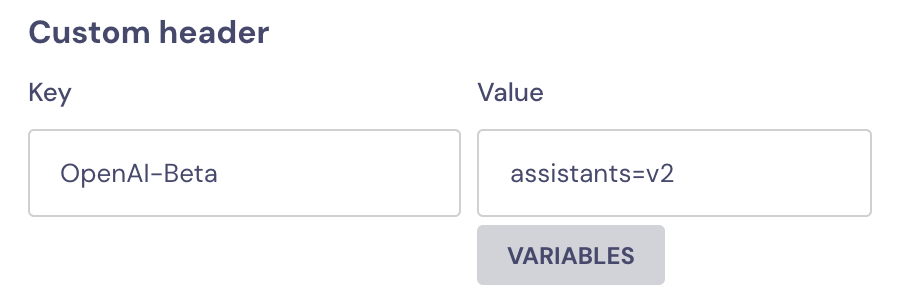

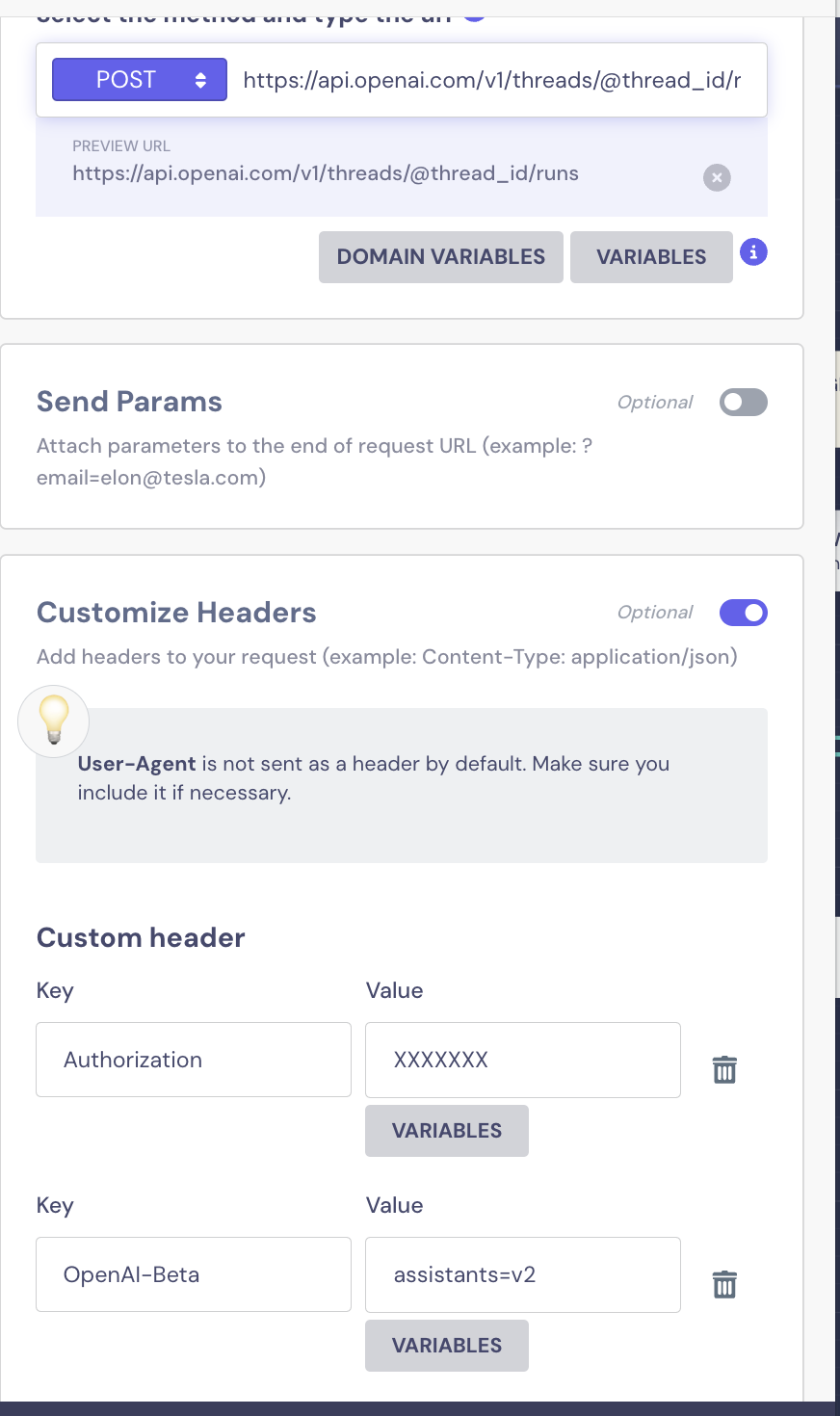

For Assistant V2

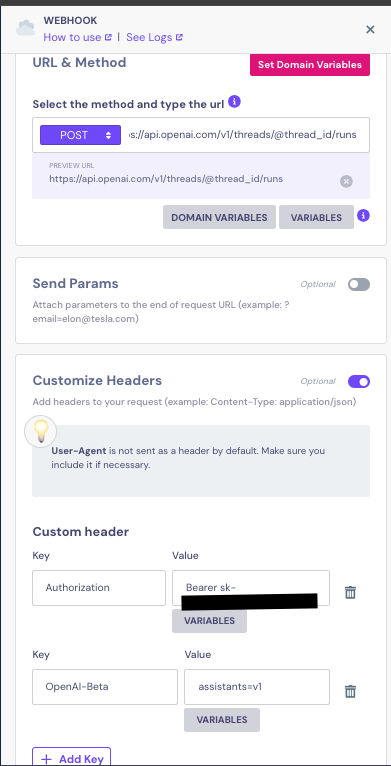

You can use the same template as above, but you must change the reference to point to v2 in all Webhook headers, as in the following example:
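As a rough illustration of the version reference, the sketch below builds the headers for an Assistants API call in plain JavaScript. The header names and values follow OpenAI's public API reference rather than this article, and `buildAssistantHeaders` and the API key placeholder are hypothetical names used only for this example.

```javascript
// Sketch: headers for Assistants API calls. Header names and values follow
// OpenAI's public API reference; apiKey is a placeholder you supply yourself.
function buildAssistantHeaders(apiKey, version = 'v2') {
  return {
    'Content-Type': 'application/json',
    Authorization: `Bearer ${apiKey}`,
    // This is the reference that selects the Assistants version (v1 or v2).
    'OpenAI-Beta': `assistants=${version}`,
  };
}

console.log(buildAssistantHeaders('YOUR_OPENAI_API_KEY'));
```

In the Landbot Webhook block, the same three key/value pairs would be entered as headers by hand.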

Let's review each Webhook block

1st Webhook

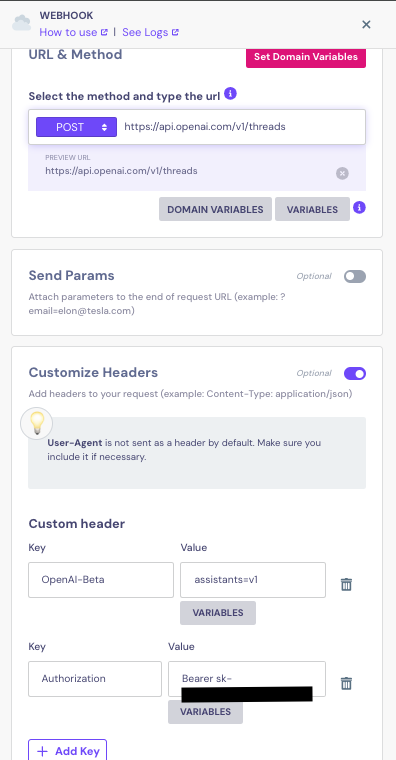

In the first one, we create a thread, which holds all the user's messages and works as the conversation history. We make a POST request to https://api.openai.com/v1/threads with the following headers:

You will need to test the request so that you can save the thread id into a variable:
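To make the "save the thread id" step concrete, here is a minimal sketch in plain JavaScript (outside Landbot). It assumes the thread response is a JSON object with an `id` field, as in OpenAI's API reference. `readThreadId`, `threadUrls` and the sample response are hypothetical and only illustrate how the saved id ends up in the URLs of the later webhooks.

```javascript
// Sketch: read the thread id from the 1st Webhook's response and build the
// URLs that the later webhooks call. sampleThreadResponse is made-up data.
function readThreadId(threadResponse) {
  if (!threadResponse || typeof threadResponse.id !== 'string') {
    throw new Error('Response does not contain a thread id');
  }
  return threadResponse.id;
}

// Plays the role of @thread_id being substituted into the webhook URLs.
function threadUrls(threadId) {
  const base = `https://api.openai.com/v1/threads/${encodeURIComponent(threadId)}`;
  return { messages: `${base}/messages`, runs: `${base}/runs` };
}

const sampleThreadResponse = {
  id: 'thread_abc123',
  object: 'thread',
  created_at: 1699012949,
  metadata: {},
};

const threadId = readThreadId(sampleThreadResponse);
console.log(threadId, threadUrls(threadId));
```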

2nd Webhook

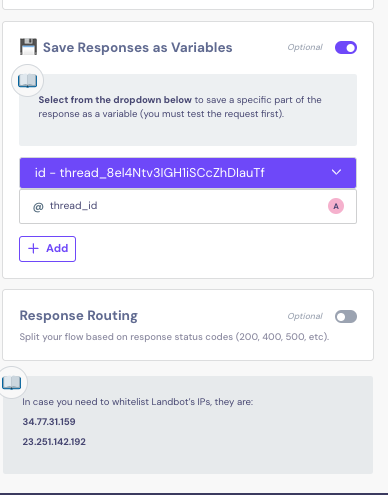

The next webhook makes a POST request to https://api.openai.com/v1/threads/@thread_id/messages (the URL contains the thread id saved from the previous webhook call).

The request uses the same headers as the previous one, with the following request body:

    {
      "role": "user",
      "content": "@user_text"
    }

The variable @user_text holds the user's query.
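For readers who want to reproduce this request outside Landbot, the sketch below builds the same body in plain JavaScript. It is only an illustration: `buildUserMessageBody` is a hypothetical helper, and how Landbot itself substitutes @user_text is not described here. `JSON.stringify` is used so that quotes or line breaks in the user's text cannot break the JSON.

```javascript
// Sketch: build the request body of the 2nd Webhook from the user's text.
function buildUserMessageBody(userText) {
  // JSON.stringify escapes quotes, backslashes and line breaks in userText.
  return JSON.stringify({ role: 'user', content: userText });
}

const body = buildUserMessageBody('What are your "opening hours"?');
console.log(body);
```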

3rd Webhook

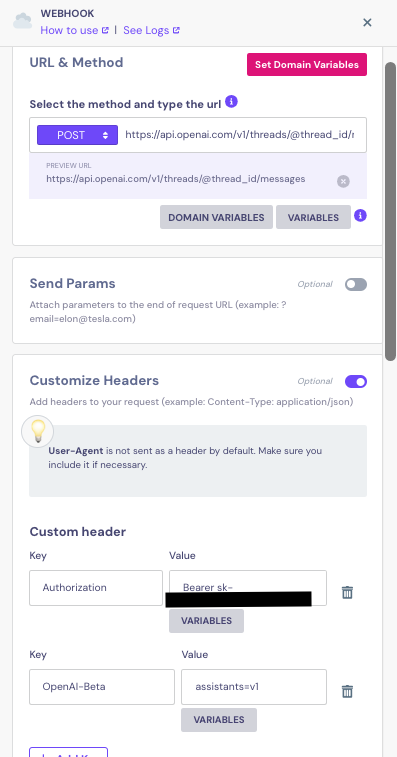

The next webhook runs the thread with the AI assistant. This is a POST request to https://api.openai.com/v1/threads/@thread_id/runs with the same headers as the previous ones.

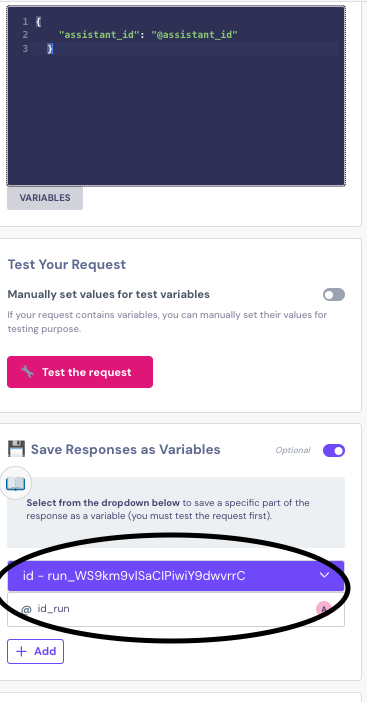

with the following request body:

    {
      "assistant_id": "@assistant_id"
    }

We will need to test the request and save the run_id into a variable, so that we can later check whether the assistant has finished generating the response:

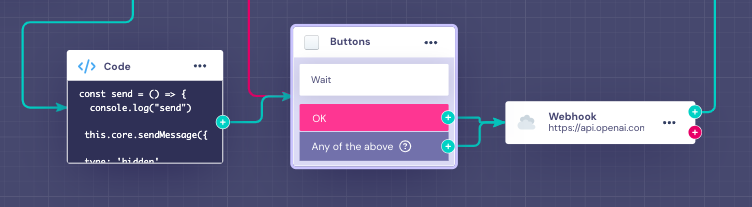
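The run step can be sketched in plain JavaScript as follows. The response fields (`id`, `status`) follow OpenAI's API reference. `buildRunRequest`, `readRun` and the sample data are hypothetical names and values used for illustration only.

```javascript
// Sketch: describe the 3rd Webhook's request and read the run id from its
// response. No network call is made; sampleRunResponse is made-up data.
function buildRunRequest(threadId, assistantId) {
  return {
    method: 'POST',
    url: `https://api.openai.com/v1/threads/${threadId}/runs`,
    body: JSON.stringify({ assistant_id: assistantId }),
  };
}

// The run id and status are the two values worth saving into variables.
function readRun(runResponse) {
  return { runId: runResponse.id, status: runResponse.status };
}

const sampleRunResponse = {
  id: 'run_abc123',
  object: 'thread.run',
  thread_id: 'thread_abc123',
  assistant_id: 'asst_abc123',
  status: 'queued',
};

console.log(buildRunRequest('thread_abc123', 'asst_abc123'));
console.log(readRun(sampleRunResponse));
```

A freshly created run normally starts as queued, which is why the flow waits before checking its status.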

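The next part is a delay that gives the run time to finish before we check its status. It consists of a code block with the following snippet:

    // Wait 5 seconds, then send a hidden message so the flow moves on
    // without any input from the user.
    const send = () => {
      this.core.sendMessage({
        type: 'hidden',
        payload: "1"
      })
    }
    setTimeout(send, 5000);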

After the code block, add a button block, which we will hide with CSS. The CSS to hide the block is the following:

    [data-block="BlockReference_0"] {
      display: none !important;
    }

Replace BlockReference with the block id of the button block.

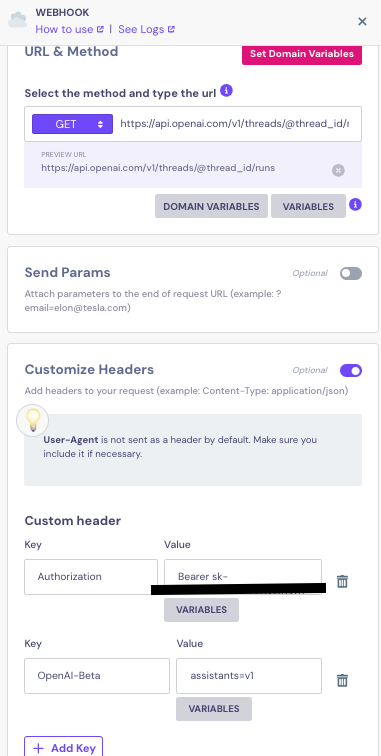

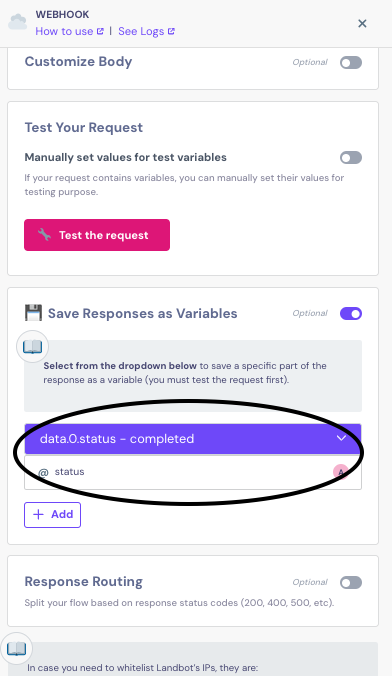

4th Webhook

Next is the webhook where we check the status of the run. This is a GET request to https://api.openai.com/v1/threads/@thread_id/runs with the same headers.

We will need to test this request as well, to save the status property of the response in a variable and check whether it is completed or not.
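The delay-then-check loop described above can be sketched in plain JavaScript like this. It is not the Landbot flow itself: `nextStep` and `waitForRun` are hypothetical helpers, the status check is passed in as a function so that no real request is made, and the list of failure statuses comes from OpenAI's API reference.

```javascript
// Run statuses that will never turn into "completed".
const TERMINAL_FAILURES = ['failed', 'cancelled', 'expired', 'incomplete'];

// Decide what the flow should do for a given run status.
function nextStep(status) {
  if (status === 'completed') return 'retrieve';
  if (TERMINAL_FAILURES.includes(status)) return 'stop';
  return 'wait'; // queued, in_progress, ...
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// getStatus is any async function that returns the current run status,
// for example a wrapper around the status-check request.
async function waitForRun(getStatus, { intervalMs = 5000, maxAttempts = 12 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    await sleep(intervalMs); // same role as the 5-second code block delay
    const status = await getStatus();
    const step = nextStep(status);
    if (step === 'retrieve') return { status, attempts: attempt };
    if (step === 'stop') throw new Error(`Run ended with status "${status}"`);
  }
  throw new Error('Run did not complete in time');
}

// Stubbed usage: no network call, the statuses are made up.
const fakeStatuses = ['queued', 'in_progress', 'completed'];
waitForRun(async () => fakeStatuses.shift(), { intervalMs: 10 })
  .then((result) => console.log(result));
```

Capping the number of attempts is a design choice of this sketch. It keeps the loop from running forever if a run never completes.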

5th Webhook

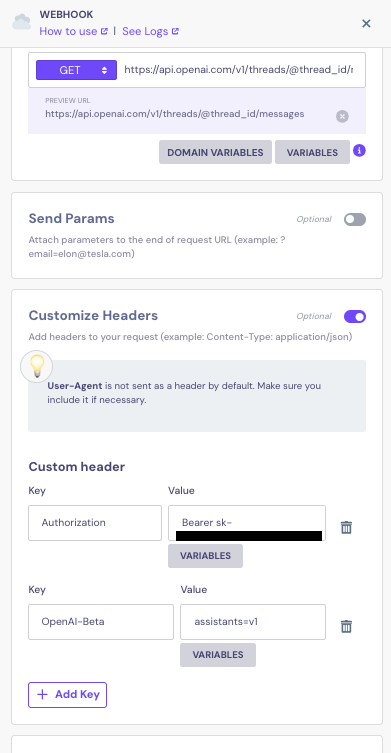

The final webhook is triggered when status = "completed" and retrieves the response generated by our assistant. This is a GET request to https://api.openai.com/v1/threads/@thread_id/messages with the same headers.

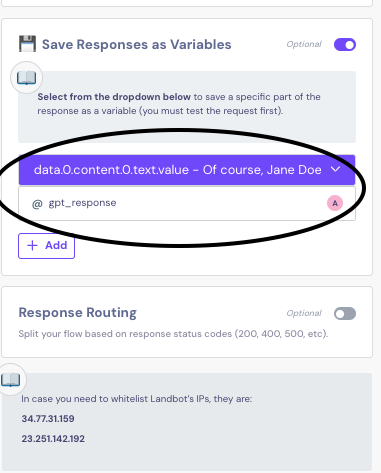

We will also need to test the request to save the part of the response that contains the Assistant response:
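As a sketch of which part of the response holds the answer, the function below picks the most recent assistant message out of a messages list. It assumes the response shape from OpenAI's API reference (a `data` array, newest message first by default, with text under `content[].text.value`). `extractAssistantReply` and the sample data are hypothetical.

```javascript
// Sketch: pull the assistant's latest reply out of the messages list.
function extractAssistantReply(messagesResponse) {
  const messages = Array.isArray(messagesResponse.data) ? messagesResponse.data : [];
  // The list is assumed to be newest first, so the first assistant
  // message found is the latest reply.
  const latest = messages.find((message) => message.role === 'assistant');
  if (!latest) return null;
  return latest.content
    .filter((part) => part.type === 'text')
    .map((part) => part.text.value)
    .join('\n');
}

// Made-up response with the same shape as a real one.
const sampleMessagesResponse = {
  object: 'list',
  data: [
    {
      id: 'msg_2',
      role: 'assistant',
      content: [{ type: 'text', text: { value: 'We open at 9am.', annotations: [] } }],
    },
    {
      id: 'msg_1',
      role: 'user',
      content: [{ type: 'text', text: { value: 'When do you open?', annotations: [] } }],
    },
  ],
};

console.log(extractAssistantReply(sampleMessagesResponse));
```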

WhatsApp Bots

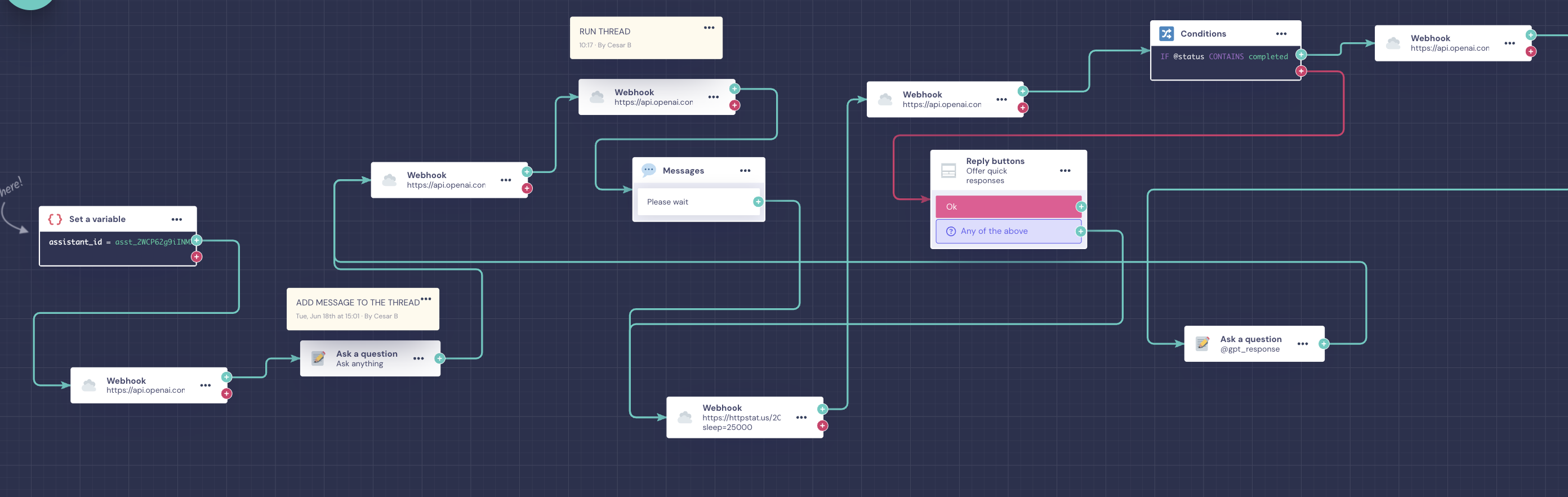

The flow is slightly different for WhatsApp:

The first part is the same: the first 5 blocks, up to the code block. After the webhook that runs the thread, shown here:

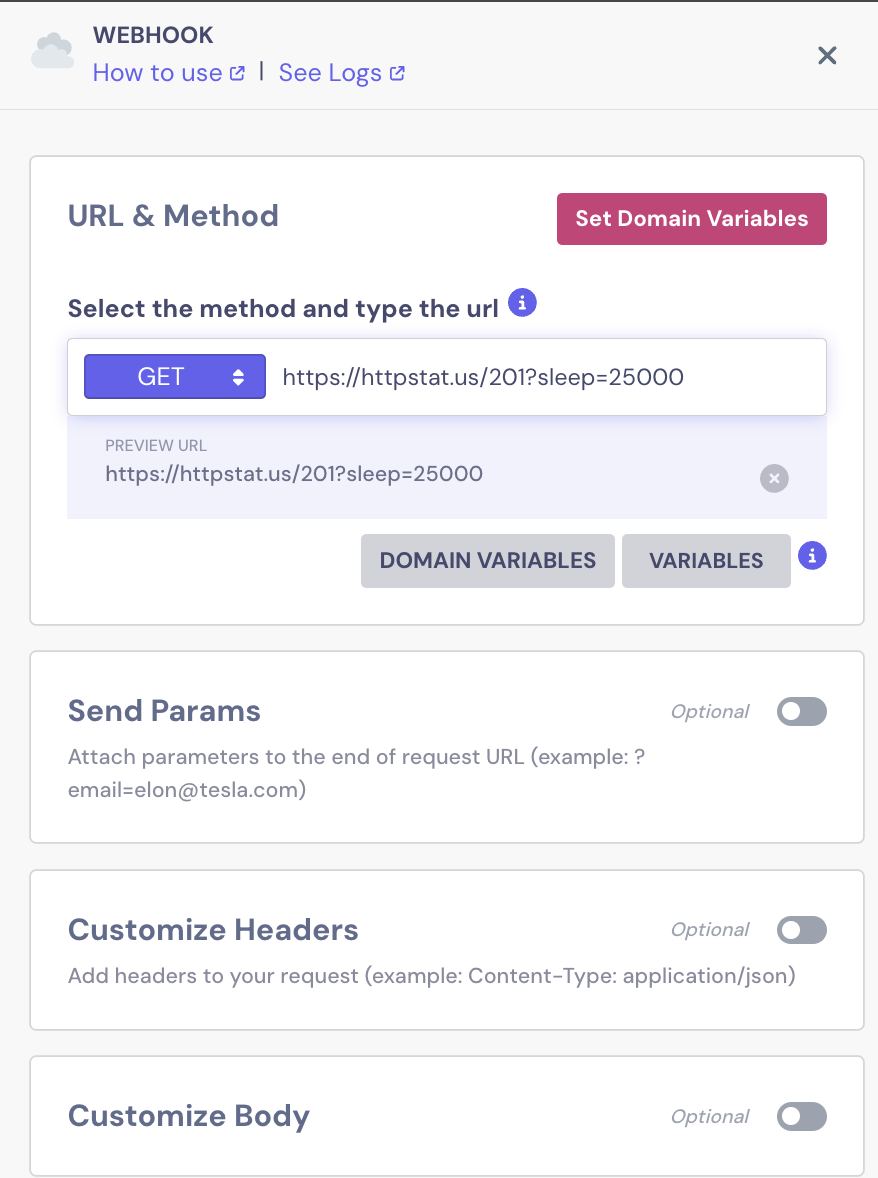

We will add a message block to tell the user to wait. After that, we will set up a webhook to generate a delay using this workaround.

Keep in mind that the webhook timeout is 30 seconds, so the delay has to be shorter than that. In this example, we set a delay of 25 seconds to give the AI time to generate the response.

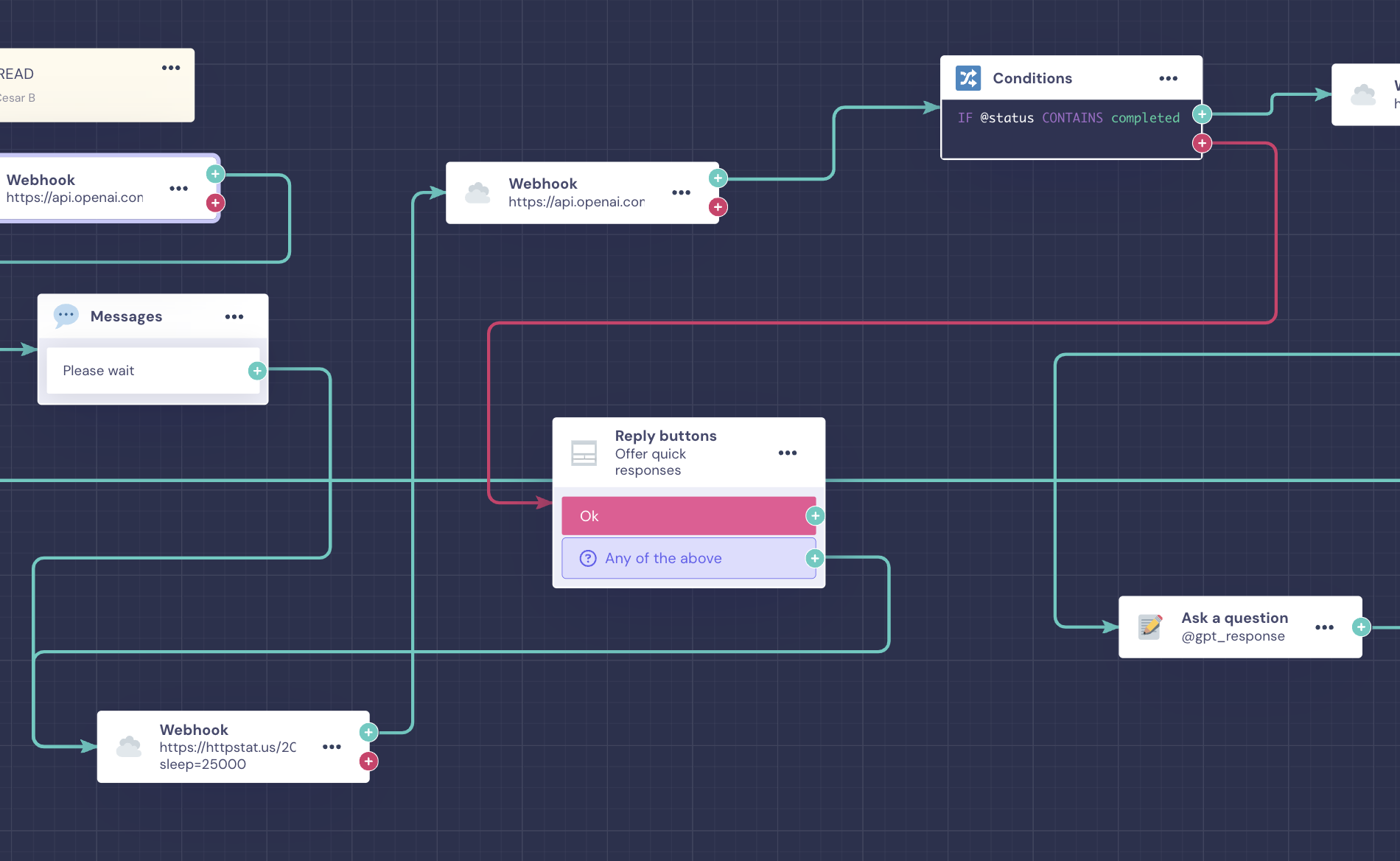

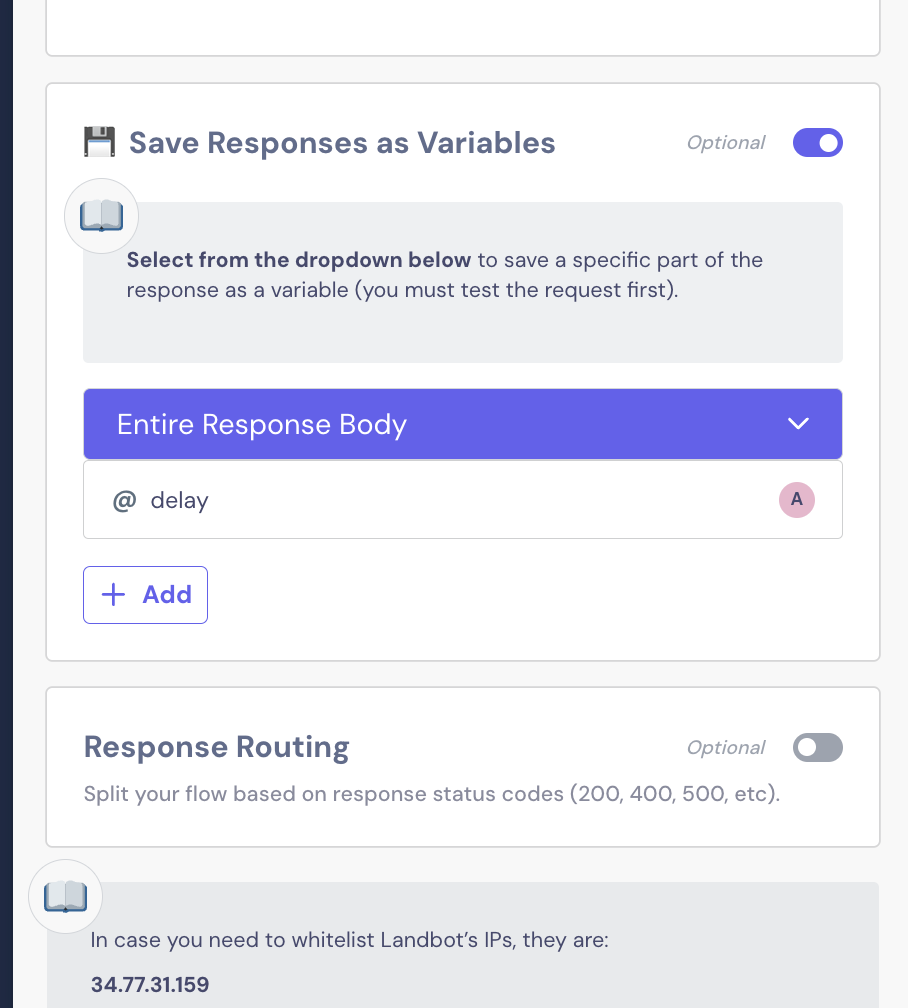

After the delay webhook, we set up the 4th Webhook and then the conditions to check whether the status of the run is completed.

If the status of the run is completed, we set up the 5th Webhook to retrieve the AI's response and then show it to the user with a question block (same as the web bot flow).

If the status is not completed, we need to add a block with user input, because this step involves a loop. In this example, we add a reply button block and then connect it to the block that generates the delay.